PhD Dissertation Research

Designing an Enabling Computer Interface for Physical Rehabilitation Purposes

On this page you can learn about my award-winning PhD dissertation (Northeastern University).

My research consisted of two main components:

A User Experience Survey (UXS) targeting computer users with various levels of physical disability. This survey sought to quantify the impact of physical disability on computer use, in terms of: positive affect, negative affect, competence, control, and accessibility.

The design and development of an Adaptive Interface Design (AID) that promotes Accessibility, Usability, and Inclusion. I applied agile methods to achieve this goal, executing iterative hardware and software development phases and usability research cycles. The hardware comprised an eye tracker and a data glove. The software consisted of LabVIEW with UDP communication on the back end, combined with a simple video game built in Unity.
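To make the back-end link more concrete, here is a minimal sketch of how one combined eye-tracker and data-glove sample could be sent as a UDP datagram. It is written in Python for illustration only; the actual system used LabVIEW and Unity. The packet layout (two gaze coordinates plus five finger-bend values), the port number, and all function names are assumptions, not details from the dissertation.

```python
import socket
import struct
from typing import NamedTuple, Tuple

# Hypothetical packet layout (an assumption, not the original protocol):
# little-endian, gaze x/y as float32, then five finger-bend values as uint8.
PACKET_FORMAT = "<2f5B"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)


class InputSample(NamedTuple):
    gaze_x: float                            # normalized screen coordinate, 0..1
    gaze_y: float                            # normalized screen coordinate, 0..1
    fingers: Tuple[int, int, int, int, int]  # bend per finger, 0..255


def encode_sample(sample: InputSample) -> bytes:
    """Pack one sample into a fixed-size datagram payload."""
    return struct.pack(PACKET_FORMAT, sample.gaze_x, sample.gaze_y, *sample.fingers)


def decode_sample(payload: bytes) -> InputSample:
    """Unpack a datagram payload, rejecting packets of the wrong size."""
    if len(payload) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(payload)}")
    gaze_x, gaze_y, *fingers = struct.unpack(PACKET_FORMAT, payload)
    return InputSample(gaze_x, gaze_y, tuple(fingers))


def send_sample(sample: InputSample, host: str = "127.0.0.1", port: int = 5005) -> None:
    """Send one sample to the game process (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_sample(sample), (host, port))


def receive_sample(sock: socket.socket) -> InputSample:
    """Block until one datagram arrives on an already-bound UDP socket."""
    payload, _sender = sock.recvfrom(1024)
    return decode_sample(payload)
```

On the receiving side, a UDP socket bound to the same port would call `receive_sample` once per frame or in a background thread. UDP suits this kind of stream because a dropped sample is quickly superseded by the next one, so there is little value in retransmitting it.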

For the virtual environment test bed, I started with Unity's beautiful demo island and expanded it by including several challenges such as a coconut throwing game, a bowling game, a puzzle game, and a helicopter ride. All of the interactions were triggered using the Adaptive Interface Design (AID) that I developed. By combining the input of an eye-tracking device and a self-made data-glove, users are able to explore and manipulate their virtual surroundings through natural eye-fixations and intuitive hand gestures.
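As an illustration of how raw gaze samples can be turned into the eye fixations that trigger interactions, the sketch below uses a common dispersion-threshold approach (often called I-DT). This is a generic technique and not necessarily the algorithm used in AID. The thresholds, the normalized coordinate system, and the function names are assumptions chosen for the example.

```python
from typing import List, Tuple

Sample = Tuple[float, float, float]           # (time in seconds, x, y), x and y in 0..1
Fixation = Tuple[float, float, float, float]  # (start time, end time, centroid x, centroid y)


def dispersion(window: List[Sample]) -> float:
    """Spread of a gaze window: (max x - min x) + (max y - min y)."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(
    samples: List[Sample],
    max_dispersion: float = 0.05,  # assumed threshold in normalized screen units
    min_duration: float = 0.2,     # assumed minimum fixation length in seconds
) -> List[Fixation]:
    """Group time-ordered gaze samples into fixations by dispersion threshold."""
    fixations: List[Fixation] = []
    start = 0
    n = len(samples)
    while start < n:
        # Grow the window until it spans at least min_duration.
        end = start
        while end < n and samples[end][0] - samples[start][0] < min_duration:
            end += 1
        if end >= n:
            break  # not enough data left for another fixation
        if dispersion(samples[start:end + 1]) <= max_dispersion:
            # Extend the window while the gaze stays tightly clustered.
            while end + 1 < n and dispersion(samples[start:end + 2]) <= max_dispersion:
                end += 1
            window = samples[start:end + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((samples[start][0], samples[end][0], cx, cy))
            start = end + 1
        else:
            # Gaze is still moving (for example during a saccade): slide forward.
            start += 1
    return fixations
```

Each detected fixation carries a centroid that a game could map to the object under the user's gaze, for example to select it or to steer the camera toward it.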

The remainder of this page includes several captures, schematics, and demonstration videos.

Picture of me receiving the ‘Excellence in Research’ Award from Stephen W. Director and President Joseph E. Aoun at the 2012 Research, Innovation, and Scholarship Expo (RISE:2012).

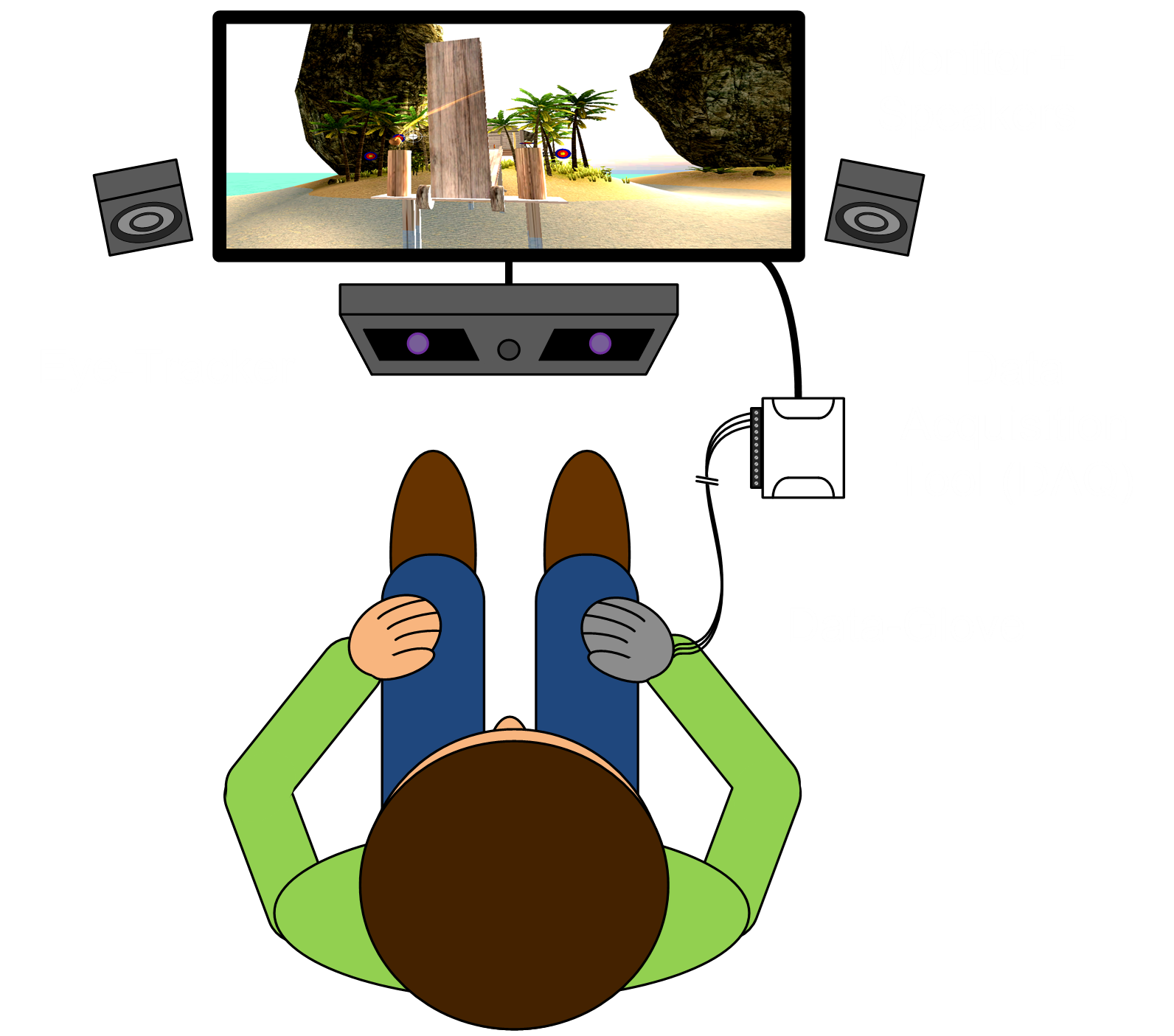

Labeled system overview: display, eye tracker, and data glove.

Data glove schematic explaining sensor placement and operations.

System overview including: data-glove, DAQ, eye-tracker, monitor, and speakers.

Diagram showing how eye fixations will automatically bring objects of interest into center-view.

Overview of game mechanics in the virtual environment: throwing, puzzle, bowling.

Northeastern University

—

Research Innovation & Scholarship Expo (RISE)

—

Demo Video

—

NEU RISE - Demo Video